This is a summary of my experiences and achievements in public health field so far when I graduate.

Professional Mission Statement

By being in health fields for 7 years as a student, I deeply know that health is related with every details in our life from national level policies to our daily food consuming. To keep promoting health globally, expertise from different fields should work together.

I am gladly joined the biostatistics master’s program in the City University of New York, School of Public Health and Health Policy and the preventive medicine bachelor’s program in the Fudan University. With these experiences, I acquired understanding of all aspects of health career. Especially in data analyzing, I learned different epidemiology studies designs to deal with different aims of studies and their analyzing strategy. I am able to finish data processing, model building, fitting based on different type and distribution of exposure variable, outcome variable and covariates (predicting models are also understood), and results and data visualization in R, SAS and SPSS. I am familiar with different types of regression (linear logistic, poison, negative binomial), survival analysis (including cox regression), longitudinal data analysis and skills like using propensity score, interaction and weighting.

I hope I can contribute myself to revealing unknown patterns of health and diseases and interpreting professional results to public.

As the informatics technology in health field is maturing, new challenges are also generated for statisticians. I wish I can learn more from different fields and use my knowledge to promote health.

Resume

Personal Information

Name: Ruoyan Han

Email: ruoyan.han94@outlook.com

Tel : 86-18537182018

Home Page: https://ry2an.github.io/

Resume Objective

An experienced new graduate master level student in Biostatistics. Trained by multiple biostatistics epidemiology, and computer science courses and also developed projects with different tools (R, R shiny and ggplot2, SAS, MYSQL, SPSS, python). Fluent in oral and written English.

Education Experiences

- Master of Public Health in Biostatistics

Sept 2018 - June 2020 (expected)

School of Public Health, City University of New York (CUNY SPH) New York, NY, United States

Current Cumulative GPA: 3.76

Description: Statistical tools, methods and special problems and solutions in the public health field are taught. Most data types (cross-sectional, survival or longitudinal) and interested variable types in different types of studies could be handled with my knowledge. Skills of learning from literature reviewing and academic writing are also acquired.

- Bachelor of Medicine in Preventive Medicine

Sept 2013 - June 2018

Fudan University Shanghai, China

Description: Medial courses of clinical medicine and publish health fields are assigned in this program. Trained from basic chemical and animal experiments to community health enhance projects. Awards: Scholarship for excellent students, Fudan University (2014)

- Secondary Major Data Science (Non - Degree)

Sept 2015 – Jan 2017

Fudan University Shanghai, China

Description: Fundamental courses of statistics and machine learning are assigned in this program, also trained with different developing tasks.

Internship Experiences

- Research Intern (Data Group)

June 2019 – Aug 2019

New York University Langone Health Hospital New York, NY, United States

Using R software and SAS to cleaning data from the database of different hospitals and study server

Expand functions of local python script to fix data errors.

Weighting participants’ data to standard population and plotting in R software.

- Department Information Intern

March 2018–April 2018

Center for Disease Control of Putuo District Shanghai, China

According to the ICD-10 classification of cancers, summarized data and calculated measurements of patients of Putuo District in a specified format in Excel to submit to superior.

- Clinical Intern

March 2017–August 2017

Fifth Hospital of Shanghai Shanghai, China

Inquired and recorded disease history and medical tests of patients to help doctors write cases.

Assisting the attending doctors in operations included fastening tissues, vessels, and dropping tools.

Helped hospitalized patients in cleaning wounds and changing the dressings daily.

Skills

Statistics:

Able to implement different univariate and multivariable analyses and interpret results properly. Familiar with different regression models, cox models, and longitudinal regression models.

Capstone project of Master degree: An ecology study on rubella by using dynamic model and Time Series Wavelet analysis.

Course project: Analyzed relationship between self-reported mental status and obesity. Controlling for multiple chronic diseases with propensity score. Measured the validity of independent variable by using Cronbach alpha. All processes of data cleaning and analyzing are addresses on SAS 9.4. (Link: https://ry2an.github.io/2020/05/20/EPID622/)

Repeated academic statistics analyses including: survival analysis (log-rank test and cox model), Poisson and Negative Binomial regression in SAS and R.

Course project: Analyzed game prices and ratings on the Steam store with longitudinal models. Game prices were treated as the time variable and publishers were treated as individuals. Linear mixed effect models and fixed effect models are used to handle continuous outcome and general estimated equation models are used to deal with discrete outcomes. (Link: https://ry2an.github.io/2020/01/11/Use-Longitudinal-Models-Analyzing-Steam-Games/)

Capstone project of Bachelor degree: By utilizing logistic regression in SPSS, the study revealed different factors that can influence the survival time of indwelling needles and their odds ratios on those influences.

Analyzing and Developing tools:

Excellent in R (with R markdown, ggplot2, lattice, xyplot, shiny and statistical testing) SAS, and MS Excel; familiar with, SPSS, Python, and ArcGIS. Able to use github as a version controller. Able to write markdown files. Here are some cases:

[Connecting Four Chess] By utilizing ggplot2 and shiny package in R, a local chess game has been made with user interface and could run by editing and reading local files. (Link: https://github.com/Ry2an/connect_4_chess)

[De Long Research Activity, Fudan University] Embed development by using Python and R software to create a program with user interface and implement some of the map-drawing functions in ArcGIS and used the cancer dataset from annual statistic reports. (Link: https://github.com/Ry2an/hanrygis)

[Crawler Programming] Crawling and describe infectious data from Shanghai Center of Disease Control’s website by using python and regular expression to identify. (Link: https://github.com/Ry2an/crawler_prac)

[Vaccine database business simulator] This program is a practicing assignment of a database course. SQL orders are embedded in visual basic windows to add, delete, edit and search the .mdb file created by Microsoft Access. (Link: https://github.com/Ry2an/VacSys)

Language:

- Fluently speaking and writing in English and Simplified Chinese.

Core Competencies

1. Apply epidemiological methods to the breadth of settings and situations in public health practice

From the biostatistics and epidemiology courses, I not only learned the concepts of study designs and models as statistics students do, I also learned what problems could happen in public health contexts (e.g. missing data, selecting samples) and how to properly handle them.

2. Select quantitative and qualitative data collection methods appropriate for a given public health context

As a student in biostatistics track, I believe the most research that I may meet or execute in my future career would be quantitative analyses. Most statistical analyses will tell researches whether different measurements are ‘significantly’ different with each other and what is the extent of the difference. Both parts of the results are needed for scientific judgements.

For data collection, I learned how to used RedCap system from my fieldwork and course EPID 622 data management.

3. Analyze quantitative and qualitative data using biostatistics, informatics, computer-based programming and software, as appropriate

I have been trained to use R, SAS and SPSS to do statistical analyses. I also learned how to use MySQL by myself. From course EPID 630, I learned client - server structure which is the common structure of health informatics constructions and managing skills of health informatics projects.

4. Interpret results of data analysis for public health research, policy or practice

Interpreting results is crucial in biostatistics and epidemiology. I learned some standard sentence template of interpreting of different measurements (OR, RR, RD, etc.) and different study designs (cohort, cases control, random controlled trials, etc.) in course BIOS 611 and EPID 611. In BIOS 620 and EPID 620, I deeper understood the concepts of the study designs and models. Based on my knowledge, I am able to illustrate the meaning of statistical measurements to audiences with different level.

5. Compare the organization, structure and function of health care, public health and regulatory systems across national and international settings

In course CHSS 610 and HPAM 610, I learned some health structure in the United States, especially in the insurance field. I am able to tell what are mean insurance setting in the United States for different population, relationship between insurance company and policies and how physicians get salaries from patients’ insurance premium.

6. Discuss the means by which structural bias, social inequities and racism undermine health and create challenges to achieving health equity at organizational, community and societal levels

Bias is a common problem of epidemiology and statistics researches. With my experience learn from courses and practices, I am able to tell what are some potential selection bias and confounding effect or ecological fallacy due to races and policies.

Biostatistics and Epidemiology Competencies

1. Identify key sources of data for epidemiologic purposes

I get known several public dataset for public health researching in course EPID 622 and BIOS 623. I also learned what are some institutions that I could ask for data sharing for researches. (e.g. NYC DOHMH)

In my fieldwork, I also learned how to organize data collection from participants.

With the knowledge learned from EPID 630, I realized that the data source of epidemiologic studies is broader than broader. We can know what are some key variable or measurements based on literature review and previous experience. However, the causal network is continuous rather than linking few points.

2. Use measures of disease frequency and association to appropriately describe the distribution and determinants of disease, and appropriately characterize statistical uncertainty around such estimates

Incidence, Prevalence are usually used for describing disease distribution in population. Risk Ratio, Odds Ratio, Risk Difference are usually used for comparing distributions of disease in different populations. With my experience in CUNY SPH, I am able to choose proper measurement based on the health, context, variable type, study design and model type

3. Critically read and evaluate the strengths and limitations of epidemiologic literature from a methodological perspective. Summarize correctly and critically evaluate statistical analyses in published literature

In epidemiology courses during CUNY SPH, students are asked for criticizing papers. Problems in reviewed papers are usually about:

Sample size (Power)

Hypothesis (Confused about difference between interested exposure and other covariates / Confused about difference between predicting model and other causal explaining model)

Interpret of Results

Participants Including and Excluding Criteria

Selection Bias / Internal Validity (Whether data and represent source population) / External Validity (Proper Target Population)

4. Select epidemiologic study designs, data collection techniques, and analytic approaches suitable for different scientific inquiries

Cross-sectional Study, Case control Study, Cohort Study are discussed in epidemiology courses. And special data processing skills or models for variable with different type (continuous, discrete, time-event) are discussed in BIOS courses.

5. Identify key threats to validity (internal and external) within and across epidemiologic studies

Internal validity means whether the information in the dataset can properly represent the information of source population.

External validity means whether the source population can properly represent the target population of the study.

The discussion about validity should start at the beginning of the study. “In what population we want to reveal a association / causal pathway?”

In EPID and BIOS courses, I also learned some strategies to reduce lost follow ups. For example, preparing better gifts. (But also need control the value of the gift to reduce selection bias.) or schedule lower frequencies of meetings.

In course EPID 611, we also discussed about validity measurements like kappa and Cronbach’s alpha.

6. Use statistical software to collect, retrieve, analyze and summarize epidemiologic data. Use information technology and computer software effectively for collection, management, analysis and presentation of public health data.

I have been trained to use R, SAS and SPSS to do statistical analyses. I also learned how to use MySQL by myself. From course EPID 630, I learned client - server structure which is the common structure of health informatics constructions and managing skills of health informatics projects.

For data collection, I learned how to used RedCap system from my fieldwork and course EPID 622 data management.

7. Describe assumptions, procedures, strengths and limitations of statistical methods that are used in public health research. Select statistical methods that are suitable for different purposes of analysis and different types of data. Apply statistical methods correctly in public health research

We discussed theories, advantages, disadvantages, typical using circumstances and study processes of case control study, cohort study, random controlled trial in the courses ad CUNY SPH. And we also learned how to choose tests, measurements, models and recoding process based on the type of variables.

8. Write scientific reports of statistical analyses correctly with tables and figures. Accurately describe computer outputs of those analyses and appropriately interpret the statistical results.

I have been trained with writing assignments in different course at CUNY SPH. There are some examples in the Academic Reports part of this portfolio.

9. Orally present statistical findings clearly and effectively

I am able to present academic reports, statistical results to different audiences. Here are some of my presenting experiences:

I also have a video presenting record for EPID 622. Link: (https://www.bilibili.com/video/BV1D54y1Q77m)

Experience in Public Health

CITI Program Certificate

- I passed the COLLABORATIVE INSTITUTIONAL TRAINING INITIATIVE (CITI Training) before I started my fieldwork.

Verify at (www.citiprogram.org/verify/?w9d34f73f-136b-4fb7-b4ab-2bc54b4c440b-31229708).

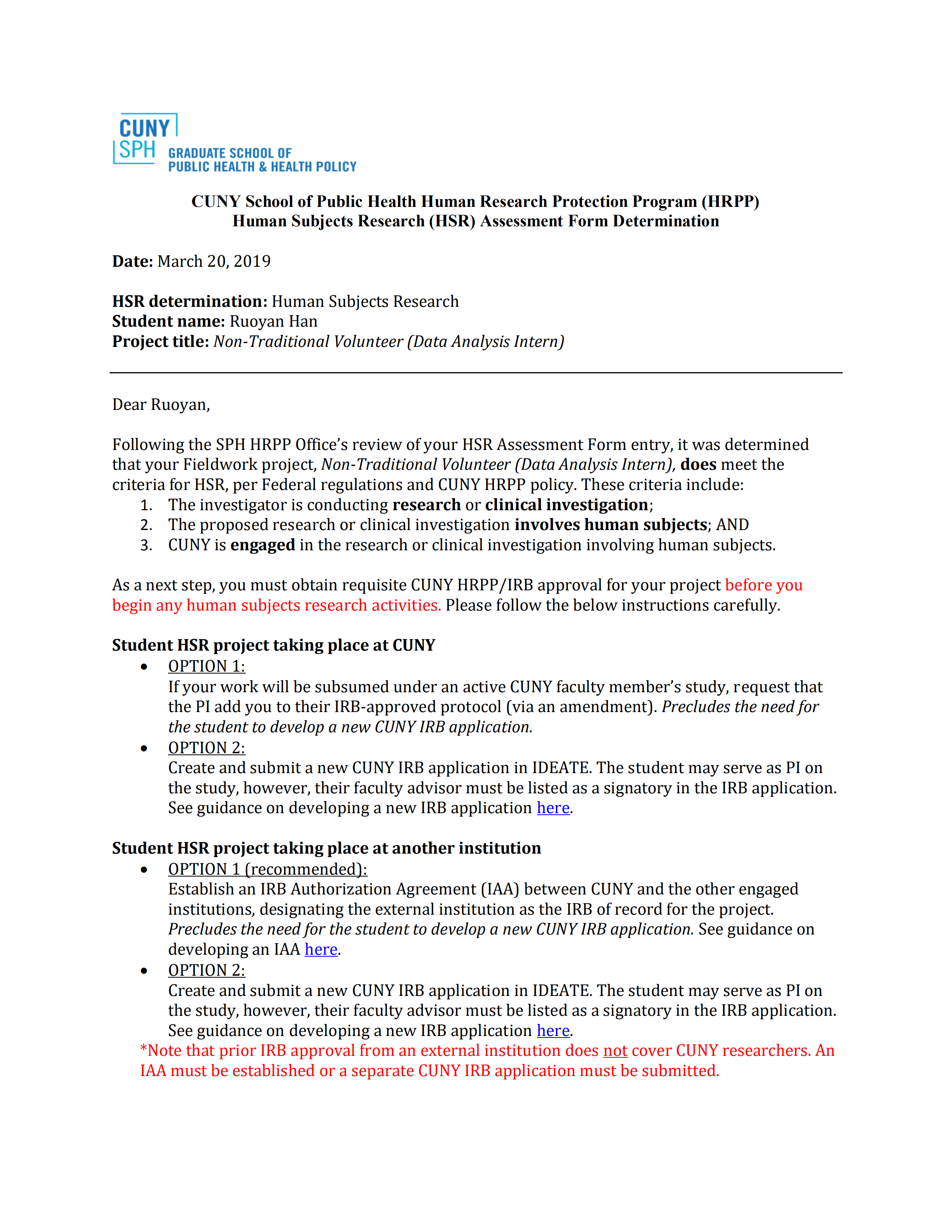

IRB determination Letter from CUNY SPH

This is the IRB determination Letter from CUNY SPH for my fieldwork.

Fieldwork Experience

- Volunteer in NYU Langone Health (2019)

- Introduction

In June to August 2019, I got a chance to work in the Department of Pediatric and Environment, NYU Langone Health. This experience was also count as the fieldwork course in CUNY SPH.

- Responsibility

Using R software and SAS to cleaning data from the database of different hospitals and study server

Expand functions of local python script to fix data errors. Weighting participants’ data to standard population and plotting in R software

- What I learned

Two things I learned from this experience

Always processing data with code. (Excel operations cannot always be repeatable)

Write daily work summary. Focusing on building and executing plans.

Clinical Intern in 5th Hospital of Shanghai (2018)

- Responsibility

Inquired and recorded disease history and medical tests of patients to help doctors write cases.

Assisting the attending doctors in operations included fastening tissues, vessels, and dropping tools.

Helped hospitalized patients in cleaning wounds and changing the dressings daily.

Volunteer in department of Health, Putuo, Shanghai (2018)

It was a very short experience. Together with officers in department of Health of Putuo district, my classmate and I went to supervising smoking banning status of buildings in Putuo district. We visited gyms, hotels and office buildings and learned strategies of banning smokes (including posters, slogans, signs, preaching, supervising and fines).

Internship in CDC, Putuo, Shanghai (2018)

- Responsibility

According to the ICD-10 classification of cancers, summarized data and calculated measurements of patients of Putuo District in a specified format in Excel to submit to superior.

Community Health Education Experience (2017)

As a course assignment, I took part in a health education event in one community in Shanghai. It was a lecture about cardiovascular diseases. My job was to deliver gifts (waters, towels, and cloth detergent to the participants).

Survey of Informatics Systems Ultilizing (2016)

henan_summer_survey

Thanks for the supporting of summer events of Fudan University, I lead few of my classmates interviewed officers whom I previously contacted in departments of health in Henan Province.

I wrote a brief summary after the event. We are surprised by the development of informatics technology in health field, but we also saw spaces of promotion in future works. (Full Text Link: https://ry2an.github.io/2016/09/01/summer-survey-2016/)

Academic Reports (Course Assignments)

Here are some academic reports that I wrote as course assignments (Full texts added in the Appendix):

- EPID 622 Assignment:

Title: Exploring Association Between Self-Reported Mental Health and Obesity by Using Propensity Score to Adjust Chronic Diseases

Link https://ry2an.github.io/2020/05/20/EPID622/

- BIOS 621 Assignment:

Title: Repeat Academic Poisson and Negative Binomial Regression

Link: https://ry2an.github.io/2020/03/05/Repeat-Academic-Poisson-and-Negative-Binomial-Regression/

- BIOS 621 ASSIGNMENT:

Title: Repeat Academic Survival Analysis

Link: https://ry2an.github.io/2020/03/04/Repeat-Ancademic-Survival-Analysis/

- BIOS 623 Assignment:

Link: https://ry2an.github.io/2020/01/11/Use-Longitudinal-Models-Analyzing-Steam-Games/

Appendix

Exploring Association Between Self-Reported Mental Health and Obesity by Using Propensity Score to Adjust Chronic Diseases

Introduction

Obesity is a risk factor for many significant morbidity1. The burden has been increasing since 1990 but still does not have a slowing down trend2,3. Up to now, in the United States, the burdens of obesity have been increasing and reached about 20% to 30% in different states4. On the other hand, there are many risk factors for obesity. One major risk factor of obesity claims by previous studies is mental health status. People who have mental illnesses could have 2 to 3 times risk of having obesity5. We will discuss the relationship between obesity and mental status in this paper.

Controlling for confounders is a normal considering when we want to measure the relationship between to conditions, and so do this time. There are also confounders between obesity and mental status. Both obesity and mental status are related to many chronic diseases. Hypertension, Dyslipidemia, and Diabetes1. Hypothyroidism6-8, Cushing’s Syndrome9,10, and polycystic Ovary Syndrome11 could cause a weight change of their patients. Besides, some drugs like Antidepressants12, Antipsychotics13, Sulfonylureas14, Thiazolidinediones14, Insulin14, Antiepileptic drugs15, Corticosteroids14 and Beta-blockers14 which are used to cure mental diseases, diabetes, hypertension, epilepsy, cardiovascular diseases, and autoimmune diseases can also cause a weight change. In another direction, chronic conditions have also been proved as a risk factor of mental illnesses16,17. Therefore, to have an accurate estimation of the relationship between mental status and obesity, we need to adjust to chronic diseases as confounders.

However, there is a problem between these confounders. Chronic diseases are usually related to each other. Some typical examples are diabetes and hypertension18; diabetes and asthma19; hypertension and kidney failure20, etc. If covariates are not independent with each other, it will influence the accuracy of the coefficient parameter21. The classical process would choose to use stepwise strategy and remove co-linear items22 which could lose usage of collected data.

Propensity score23 is an approach that could help with the co-linearity covariates without eliminating them out of the data analysis24,25. The propensity score is the predicted probability for individuals in the dataset of being exposed by giving confounding values. One way to utilize the score is to adjust for the covariates. In observational studies, individuals are typically clustered into different exposure groups with different probabilities, where the confounding can occur. Therefore, by controlling for the propensity score, confounders can also be adjusted23.

In this study, regression models would be fitted to test on what extent that propensity score could enhance the relationship estimation between self-reported mental status and obesity. Based on previous studies, we expected that the adjusted relationship would be positive, which means people with poorer mental status could have more chances to get obese.

Method

Data

Dataset of NYC HANES 2014-2014 (New York City Health and Nutrition Examination Survey)26 was used in the analyses. The dataset includes participants from households who live in five boroughs, over 20 years old, and non-institutionalized. There were two layers in the sampling strategy of this dataset. In the first layer, NYC was geologically recognized as 6236 segments and 144 segments are randomly picked. In the selected segments, 0 to 2 household adults were randomly selected based on how many eligible adults are living in the houses within the 144 segments. The reason to select by houses is to minimize the household-to-household clustering. The total response rate was 36%. 1524 participants finished the survey.

Several variables in the NYC HANES dataset were used in this analysis:

‘Self-reported mental condition (MHQ_1)’ was chosen as the interested exposure. It is a 5-categories choice question. In some of our analysis, we transformed it into a binary variable by whether the self-reported mental status is ‘poor’ or not. ‘Body Mass Index (BMI)’ is a measurement that already generated in the dataset. The outcome variable in this analysis ‘whether obese’ was created based on this variable. If one participant’s BMI was over 30, he would be marked as ‘obese’. Five chronic diseases covariates were considered in the analysis: ‘whether having high blood pressure (BPQ_2), ‘whether having diabetes or sugar diabetes (DIQ_1)’, ‘whether having cancer or malignancy (MCQ_14)’, ‘whether having asthma (MCQ_1)’ and ‘whether having failing kidneys (MCQ_12)’. Besides, six mental health questions (MHQ_2 to MHQ_7) were used for validity tests. Age and Gender were used as common confounder and modifier. (Details of questions and items used in this analysis would be added to the appendix.)

Cronbach alpha

To check the validity of the outcome variables, self-reported mental health, Cronbach alphas are calculated. There are seven questions (MHQ1 - MHQ7) that asked all participants about their mental mood. The first question was picked as the outcome. Cronbach alpha is calculated based on the answers of the seven questions to see whether they have a good consistency. During data processing, we re-coded question MHQ-1 to let it have the same direction with the other six questions of representing mental status from better to worse rather than from worse to better.

Weighting Sample

Two parts of the survey results were covered in our analyses: computer interview and physical tests, which correspond to two weights in the dataset (CAPI_WT and EXAM_WT). The sample size of the computer interview is 1,527 and the sample size of the physical tests is 1500. In the regression analyses, participants with missing data are removed. Therefore, the weight of the physical tests, which have lower samples, was used to adjusting in the models.

Univariate Analysis and Multivariate Analysis

In the univariate analysis part, to comparing regression coefficients of the chronic diseases, we also added age and gender into the models:

(model 1)

(model 2)

(model 3)

(model 4)

(model 5)

(model 6)

Multivariable regression model included all covariates in the univariate analysis:

(model 7)

Logistic regression with Propensity Score

The propensity score is the estimated probability of exposure based on covariates, which could be measured with the following model:

(model 8)

After the score is calculated, we could remove the original covariates and replace them with the score:

(model 9)

Tools

All data processing and analysis are finished in SAS 9.4.

Results

Descriptive Analysis

Table 1. Covariates Distribution Among Different Outcome Status

This table shows the distribution of covariates that include in the analyses among participants with different obesity status. ‘Mental Status: Poor’, which is the binary-transformed variable from ‘Mental Status’ is the exposure variable in the following analyses.

Questionnaire Validity

We calculated the Cronbach alpha for seven mental questions that asked all participants (MHQ_1 to MHQ_7). The alpha of answers to the seven questions is over 0.8, which indicates that they have a high consistency. Any banning of the variables could cause a reduction of the alpha value.

Table 2. Cronbach Alpha for Mental Status Questions

| Question | Alpha after banned this question |

|---|---|

| Mental Status Level (Excellent/ Very Good/ Good/ Fair/ Poor) | 0.84 |

| Past 30 days, felt nervous level (All/ Most/ Some/ A little/ None of the time) | 0.84 |

| Past 30 days, felt hopeless or not (All/ Most/ Some/ A little/ None of the time) | 0.82 |

| Past 30 days, felt restless or fidgety or not (All/ Most/ Some/ A little/ None of the time) | 0.83 |

| Past 30 days, felt depressed, no cheerin or not (All/ Most/ Some/ A little/ None of the time) | 0.82 |

| Past 30 days, felt everything an effort or not (All/ Most/ Some/ A little/ None of the time) | 0.83 |

| Past 30 days, felt worthless or not (All/ Most/ Some/ A little/ None of the time) | 0.82 |

| Total Alpha | 0.85 |

Classical Regression Analysis

Table 3 shows the result of univariate analysis and multivariable regression. Parameter of diabetes and kidney failure have obvious changes between the univariate model and the multivariate model. Other covariates and exposure did not change a lot in the multivariate model. P-value of exposure increased.

Regression with Propensity Score

The propensity score, which means the probability of exposure estimated by confounders, is fitted for each individual with a logistic regression model. Table 3 compares how the regression coefficient of self-reported mental status change between classical regression and regression using the propensity score.

To have a better view of natural log odds distribution under 5 original different self-reported status, two regression models with ordinal exposure degree were fitted and shown in Table 4.

Table 3. Regression models for the relationship between Self-reported Mental Status and Obese

Table 4. Regression with Five-Degree Exposure

Note: The reference category of exposure was mental status equal to “poor”.

Discussion

The validity of the exposure question.

Since the exposure in this study is the self-reported mental status. The validity of this question needed to be checked. From the Cronbach’s alpha in Table 2, we can see that the total alpha of the questions was over 0.8, which means the consistency of these questions was high and they were not results that participants randomly made. Besides, by removing any of the questions, the total alpha would reduce, which means the answers to these questions were unique.

Relationship between self-reported mental status and obesity.

The result from regressions with original confounders and propensity scores in Table 3 are similar. The odds of being obese is lower among people whose self-reported mental health status is ‘poor’. Results in Table 4 indicate that this relationship is becoming stronger when the self-reported mental status is lower. In models using the propensity score, this relationship is confounded by age, older people have less chance of being obese, which is opposite to the initial hypothesis from the literature review. We can also reject the hypothesis that this relationship is not modified by gender on 0.05 critical criteria. By controlling for other covariates, the natural log odds of obese males are 1.75 higher than females. The model also showed the confounding effect of hypertension and asthma. People with these chronic diseases could have lesser odds of being obese.

However, the causal property is hard to extract from the results. Firstly, the NYC HANES is a cross-sectional survey that does not have temporal order in different variables. Secondly, the causal direction, in this case, is unclear. To illustrate the causal pathway, a cohort study would a better design.

Effect of using propensity score approach in regression.

The regression coefficient of exposure did not change a lot in models with propensity score. However, since the standard error of the parameter reduced a little bit, the p-value was also reduced. By comparing the regression parameter of chronic diseases from univariate analysis to multivariable analysis, we can notice that some chronic disease changed their regression coefficient and p-values like diabetes and kidney failure, this means these chronic diseases are actually related to each other. Besides, the regression coefficient and p-value of age as a confounder also changed in the model with propensity score, which could reduce biases from chronic diseases.

Limitation

The propensity score was not showing its power in this case obviously. By using the propensity score approach to control for confounders, it can also resolve two problems other than correlations between confounders: the unacceptably large number of covariates and unknown confounders. If we could have more records about chronic disease, the difference between classical multivariable analysis and regression with propensity score could be more obvious.

Besides, the number of exposed individuals was relatively low in this analysis. It could influence the power of the analyses which needs to be concerned if the result were not statistically significant. And it may also reduce the precision of our results.

Conclusion

Among people who live in New York city and older than 20 years old and have ‘poor’ self-reported mental status is negatively related to obesity. By controlling for age and chronic diseases, the odds of being obese for people whose self-reported mental status is ‘poor’ is 0.35 (95%CI: 0.13, 0.93) times than other people.

The propensity score approach in this analysis did not improve the model obviously.

Reference:

Heart N, Lung, Institute B, Diabetes NIo, Digestive, Diseases K. Clinical guidelines on the identification, evaluation, and treatment of overweight and obesity in adults: the evidence report. National Heart, Lung, and Blood Institute; 1998.

STATEofCHILDHOODOBESITY. Adult Obesity Rates. In:2018:https://stateofchildhoodobesity.org/adult-obesity/.

Behavioral Risk Factor Surveillance System Survey Data. 2018.

Prevention CfDCa. Adult Obesity Prevalence Maps. https://www.cdc.gov/obesity/data/prevalence-maps.html#overall. Published 2018. Accessed May.10.2020, 2020.

Avila C, Holloway AC, Hahn MK, et al. An Overview of Links Between Obesity and Mental Health. Curr Obes Rep. 2015;4(3):303-310.

Pearce EN. Thyroid hormone and obesity. Curr Opin Endocrinol Diabetes Obes. 2012;19(5):408-413.

Kitahara CM, Platz EA, Ladenson PW, Mondul AM, Menke A, Berrington de Gonzalez A. Body fatness and markers of thyroid function among U.S. men and women. PLoS One. 2012;7(4):e34979.

Laurberg P, Knudsen N, Andersen S, Carlé A, Pedersen IB, Karmisholt J. Thyroid function and obesity. Eur Thyroid J. 2012;1(3):159-167.

Kirk LF, Jr., Hash RB, Katner HP, Jones T. Cushing’s disease: clinical manifestations and diagnostic evaluation. Am Fam Physician. 2000;62(5):1119-1127, 1133-1114.

Tiryakioglu O, Ugurlu S, Yalin S, et al. Screening for Cushing’s syndrome in obese patients. Clinics (Sao Paulo). 2010;65(1):9-13.

Legro RS, Arslanian SA, Ehrmann DA, et al. Diagnosis and treatment of polycystic ovary syndrome: an Endocrine Society clinical practice guideline. J Clin Endocrinol Metab. 2013;98(12):4565-4592.

Uguz F, Sahingoz M, Gungor B, Aksoy F, Askin R. Weight gain and associated factors in patients using newer antidepressant drugs. Gen Hosp Psychiatry. 2015;37(1):46-48.

Galling B, Calsina Ferrer A, Abi Zeid Daou M, Sangroula D, Hagi K, Correll CU. Safety and tolerability of antidepressant co-treatment in acute major depressive disorder: results from a systematic review and exploratory meta-analysis. Expert Opin Drug Saf. 2015;14(10):1587-1608.

Velazquez A, Apovian CM. Pharmacological management of obesity. Minerva Endocrinol. 2018;43(3):356-366.

Ness-Abramof R, Apovian CM. Drug-induced weight gain. Drugs Today (Barc). 2005;41(8):547-555.

Brown HK, Qazilbash A, Rahim N, Dennis CL, Vigod SN. Chronic Medical Conditions and Peripartum Mental Illness: A Systematic Review and Meta-Analysis. Am J Epidemiol. 2018;187(9):2060-2068.

Brown HK, Wilton AS, Ray JG, Dennis CL, Guttmann A, Vigod SN. Chronic physical conditions and risk for perinatal mental illness: A population-based retrospective cohort study. PLoS Med. 2019;16(8):e1002864.

Krzesinski JM, Weekers L. [Hypertension and diabetes]. Rev Med Liege. 2005;60(5-6):572-577.

Baek JY, Lee SE, Han K, Koh EH. Association between diabetes and asthma: Evidence from a nationwide Korean study. Ann Allergy Asthma Immunol. 2018;121(6):699-703.

Gargiulo R, Suhail F, Lerma EV. Hypertension and chronic kidney disease. Dis Mon. 2015;61(9):387-395.

Wold S, Ruhe A, Wold H, Dunn I, WJ. The collinearity problem in linear regression. The partial least squares (PLS) approach to generalized inverses. SIAM Journal on Scientific and Statistical Computing. 1984;5(3):735-743.

Weitzen S, Lapane KL, Toledano AY, Hume AL, Mor V. Principles for modeling propensity scores in medical research: a systematic literature review. Pharmacoepidemiology and drug safety. 2004;13(12):841-853.

Haukoos JS, Lewis RJ. The Propensity Score. Jama. 2015;314(15):1637-1638.

Rosenbaum PR, Rubin DB. The central role of the propensity score in observational studies for causal effects. Biometrika. 1983;70(1):41-55.

D’Agostino Jr RB. Propensity score methods for bias reduction in the comparison of a treatment to a non‐randomized control group. Statistics in medicine. 1998;17(19):2265-2281.

Perlman SE, Charon Gwynn R, Greene CM, Freeman A, Chernov C, Thorpe LE. NYC HANES 2013-14 and Reflections on Future Population Health Surveillance. J Urban Health. 2018;95(6):777-780.

Appendix:

Questions used in the analysis:

MHQ_1

Now thinking about your mental health, which includes stress, depression and emotional problems, would you say your overall mental health is excellent, very good, good, fair, or poor?

1: Excellent

2: Very good

3: Good

4: Fair

5: Poor

.D: Don’t know

.R: Refusal

.: Legit skip

MHQ_2

During the past 30 days, how often did you feel nervous?

1: All of the time

2: Most of the time

3: Some of the time

4: A little of the time

5: None of the time

.D: Don’t know

.R: Refusal

.: Legit skip

MHQ_3

During the past 30 days, how often did you feel hopeless?

1: All of the time

2: Most of the time

3: Some of the time

4: A little of the time

5: None of the time

.D: Don’t know

.R: Refusal

.: Legit skip

MHQ_4

During the past 30 days, how often did you feel restless or fidgety?

1: All of the time

2: Most of the time

3: Some of the time

4: A little of the time

5: None of the time

.D: Don’t know

.R: Refusal

.: Legit skip

MHQ_5

During the past 30 days, how often did you feel so sad or depressed that nothing could cheer you up?

1: All of the time

2: Most of the time

3: Some of the time

4: A little of the time

5: None of the time

.D: Don’t know

.R: Refusal

.: Legit skip

MHQ_6

During the past 30 days, how often did you feel that everything was an effort?

1: All of the time

2: Most of the time

3: Some of the time

4: A little of the time

5: None of the time

.D: Don’t know

.R: Refusal

.: Legit skip

MHQ_7

During the past 30 days, how often did you feel down on yourself, no good or worthless?

1: All of the time

2: Most of the time

3: Some of the time

4: A little of the time

5: None of the time

.D: Don’t know

.R: Refusal

.: Legit skip

BPQ_2

Has SP ever been told by a doctor or other health professional that SP had hypertension, also called high blood pressure?

1: Yes

2: No

.D: Don’t know

DIQ_1

(Other than during pregnancy, ) Has SP ever been told by a doctor or health professional that SP has diabetes or sugar diabetes?

1: Yes

2: No

3: Borderline or Prediabetes

.D: Don’t know

MCQ_1

Has a doctor or other health professional ever told SP that (you have/s/he/SP has) asthma?

1: Yes

2: No

MCQ_12

Has SP ever been told by a doctor or other health professional that SP had weak or failing kidneys? Do not include kidney stones, bladder infections, or incontinence.

1: Yes

2: No

.D: Don’t know

MCQ_14

Has SP ever been told by a doctor or other health professional that SP had cancer or a malignancy of any kind?

1: Yes

2: No

Repeat Academic Poisson and Negative Binomial Regression

Author: Ruoyan Han

Code of this project is avaliable at: https://github.com/Ry2an/case_code/tree/master/bios623as2

Introduction

In 1996, the Australian government exempted a new gun law that banned semiautomatic rifles and pump-action shotguns and started to buyback firearms. Chapman, et al, did an analysis to reveal whether the law change had brought changes in death rates for different reasons related to gunshot [1]. This document is the assignment of BIOS 621 and I will repeat one outcome (total non-firearm death rate) in Chapman, et al’s article with same statistical methods and compare the usage of log negative binomial regression and log Poisson regression in this situation.

Method

Data setting

Rate calculation

Since the total death is gradually growing, comparing the absolute death number change in two law backgrounds could be affected. Therefore, as the same thing the author did, a death rate is calculated by the total death divide by the total person-year at risk for some linear regression calculation in this assignment.

Analysis processes

At the first step, log (mean) and log (standard deviation) of the outcome measure (total non-firearm death) and their stratified results are calculated to see whether the outcome could be recognized as a Poisson distribution. If the outcome is in Poisson distribution, the log(mean) and log (standard deviation) should be similar.

The second step is to calculate the mean and 95% confidence interval of the total non-firearm death rate in two law backgrounds (before 1997 and after 1997). Three solutions are attempt to get the result. The first one is to treat the means of non-firearm death rate before and after the law change are in two sample distributions (t distribution). Therefore, the standard error of the means could be calculated by standard deviation divided by square root of n into two periods of time, where n means that number of means in two periods of time. The degree of freedom is equal to n – 1. The formula is shown as (1).

The second solution of calculating the mean and its 95%confidence interval is by linear regression. The model is shown as (2).

The third solution will use log negative binomial regression to calculate the mean and confidence interval of the total non-firearm death rate before and after the law change. The model is shown as (3). (in Negative Binomial Regression)

By assuming law change is an effect modifier of the relationship between time (year) and total non-firearm death rate, three models shown in the article has been fitted. The formula is shown as (4) – (6). (in Negative Binomial Regression)

A log Poisson regression is also fitted to compare with model The model formula is shown as (7).

Model (6) and model (7) are compared by Akaike information criterion (AIC), log (likelihood of regression), diagnose plots and regression curve with residuals. In order to compare the intercept change at the year of the law update, I will use ‘(year - 1996)’ to replace the original ‘year’ in the model (6) and model (7).

Software:

The software I used for calculation and analysis in this assignment are R software 3.6.1 and MS Office Excel 2019.

R packages and tools used: R markdown; MASS; ggplot2; plotrix; plyr; countreg; gridExtra

Results

The total log mean and log standard deviation for total non-firearm deaths rate is 2.41 and 0.32. The log mean and log standard deviation before the law change for total non-firearm deaths rate is 2.36 and 0.27.

The log mean and log standard deviation after the law change for total non-firearm deaths rate is 2.46 and 0.17.

Log mean and log standard deviation of outcome is not similar. Therefore, we cannot assume that the log outcome is in a Poisson distribution.

Mean calculation and interpret.

Solution 1 gave us the average rate of total non-firearm death before the law change is 10.61 (95% CI: 8.64, 12.57) deaths per 100000 person-years. The average rate of total non-firearm death before the law change is 11.80 (95% CI: 9.83, 13.76) deaths per 100000 person-years.

Solution 2 gave us the average rate of total non-firearm death before the law change is 10.61 (95% CI: 10.00, 11.21) deaths per 100000 person-years. The average rate of total non-firearm death before the law change is 11.80 (95% CI: 11.19, 12.40) deaths per 100000 person-years.

Solution 1 gave us the average rate of total non-firearm death before the law change is 10.61 (95% CI: 10.09, 11.16) deaths per 100000 person-years. The average rate of total non-firearm death before the law change is 11.79 (95% CI: 11.21, 12.41) deaths per 100000 person-years.

A plot has generated to describe the mean calculation from three solutions.

Fig 1. Results of three solutions to calculate mean non-firearm death rate before and after the law change

Table 1. Regression result

From the result, we can know that in the year of 1979 to 1996 before the law change, the average trend of total non-firearm death rate is 1.002 (rate ratio) from model (4), while in the year of 1997 to 2013 after the law change, the trend of total non-firearm death rate is 0.986 (rate ratio). The change of this difference in log of the rate ratio is -0.0348 (the change of slope of two period log(rate ratio)) from model 6 and this difference is statistically significant.

Fig 2. Predicted Values of Model (6) (Left) and Model (7) (Right)

Fig 3. Diagnostic Plots of Model (6) (Left 4 plots) and Model (7) (Right 4 Plots)

Fig 4. Hang plots of Model (6) (Left) and Model (7) (Right)

Discussion

Compare with the paper’s result

The total non- firearm death results of Chapman, et al is copied as follows. The mean trend of death rate before the law change is equal to exp (0.0212) = 1.021 and its confidence interval has been shown above. The mean trend of death rate after the law change is equal to exp (0.0212 – 0.0348) = 0.986. The 95% confidence interval of this trend is exp (0.0157 – 0.0348) = 0.981 and exp (0.0226 – 0.0348) = 0.992, which is calculated by adding the coefficient of ‘Law’ into the confidence interval of coefficient of (Year - 1996). RT and RL are equal to the rate ratio at the law change and the exponential of the slope difference between the two status which has been shown above in the table.

Fig 5. Original results from the paper of Chapman, et al

The association between separate model and combined model

The reason that I didn’t do a separate log Poisson regression before and after the law change is because that they are equivalent with the model (7). Let’s look at model (4) – (6) as an example. If we substitute the Law value in the model (6) as 0 and 1, which is also the value I generated in the dataset, the model will look like this:

We can see that these two separated models look quite similar with model (4) and model (5). Actually, they are the same. Law = 0 equals to year < 1997, and Law = 1 equals to year >= 1997. If we add the β of Year * Law in model (6) with the β of Year in model (6), we could get the same value of Year’s β in model (5). If we do not add anything to the β of Year in model (6), it equals with the β of Year in model (4). In fact, the β of Year * Law in model (6) describes the differences between the Years’ beta of model (4) and model (5), and we can additionally notice the standard error and p-value of these difference in model (6). That’s why I don’t have to try another separated model for Poisson regression.

Model comparison

From AIC, we can notice that the log negative binomial model fits better than log Poisson regression.

The Log Likelihood of Negative Binomial model is higher than log Poisson, which also means the model (7) fits better.

From the estimating lines (Fig 2.) the two models provided quite same estimating curve, the only difference I noticed is that the end of the blue line (total non-firearm death rate before law change) (in the green circle) in the log Poisson regression is a little bit higher than the log negative binomial regression.

The diagnostic plots of the two models (Fig 3.) are almost the same, the only difference I noticed is in the Q-Q plots. It seems that the error term in log negative binomial regression is better normally distributed than the log Poisson regression.

Limitation

It’s understandable that the rate after the law change is still higher because the rate is just starting to decreasing after the low change by the result of the regressions.

Fig 6. Histogram of total non-firearm death rate in two interested period

However, what we should expect from the law change is that the death rate of firearm could be lower. So, what about the non-firearm deaths? Shall it increase because fewer people will be killed by gunshot? Or shall it be stable because people could still be alive if they avoid the gunshot? Or shall it decrease because people are healthier and healthier? We need a comparison. If we have a global or other comparable total non-firearm death rate, we could know whether the law has actually changed something. If naturally the total non-firearm death rate is decreased after 1996 if some grate medical product has been made, the curve could be same without the law change. After comparing with a counterfactual rate, we could take a deeper insight in to the reason for total non-firearm deaths started to decrease after the law change.

If we do not know the background of the law change, the curve of death rate could be seemed very normal, just a little peak in 1996. Actually, the most obvious change in the raw curve is around 1998 and after 2005. But we do not know whether something important happened at that time. Even there is a cause of raw curve changing happed in the year 1996, we could not distinguish whether it was the law upgrade or other potential mediators and confounders.

The last thing I want to discuss is that the confidence intervals in the model (7) (Log Poisson Regression) is narrower than the model (6) (Log Negative Binomial Regression). However, both the AIC and log-likelihood show that the log negative binomial regression is better. That is because although log Poisson regression is more precise in this case because of its strict distribution assumption, it lost accuracy compare to the negative binomial regression.

Conclusion

This assignment has totally repeated the result of Chapman, et al in the analysis of total non-firearm death rate that the trend of the rate has statistical significantly changed after the year of 1996. From 1979 - 1996, the mean rate of total non-firearm death rate was 10.61 (95%CI, 10.09 - 11.16) per 100,000 person-year (annual trend 1.021, 95%CI: 1.016 - 1.026), whereas from 1997 - 2013, the mean rate of total non-firearm deaths rate was 11.79 (95%CI: 11.21, 12.41) per 100,000 person-year (annual trend 0.986, 95%CI: 0.981, 0.992).

Besides, this assignment compared Log Negative Binomial Regression’s and Log Poisson regression’s performance under this circumstance. The result shows that Log Negative Binomial Regression is better than Log Poisson regression in this case.

Reference:

[1] Chapman, S., Alpers, P., & Jones, M. (2016). Association between gun law reforms and intentional firearm deaths in Australia, 1979-2013. Jama, 316(3), 291-299.

Repeat Academic Survival Analysis

Author: Ruoyan Han

Code and data for this case is available at: https://github.com/Ry2an/case_code/tree/master/bios623as3

Introduction:

This practicing case is going to repeat partial work of Wondwossen, et al’s paper1.Their paper is focusing on whether the mortality is different of tuberculosis patients who also have HIV when receiving cART treatment at different time points (first week, fourth week and eighth week). This case is mainly repeating Figure 2 and Table 2 of the paper, which included survival analysis by using Kaplan Meier function and Proportional Hazard approach with several corvariates. In addition, residuals of different models are shown and interpreted in this document.

Methods

Data Source:

The dataset used in this paper is included in the supplement of Wondwossen, et al’s paper.

Software:

R software 3.6.1

Package: survival, survminer

Analysis Process:

- Kaplan Meier curve :

To repeat the Figure 2 of the original paper, survfit() and survdiff() from R package ‘survival’ are used to calculate. The group setting of Kaplan Meier approach is by three different time of receiving cART. The plot of survival curve and risk table is generated by ggsurvplot() function of package ‘survminer’.

- Log-rank test:

A log rank test is implemented by survdiff() function under R package ‘survival’ to tell whether the survival curve of treatments happened in the week 1, week 4 and week 8 are different with each other.

Proportional Hazard Analysis:

Univariate Analysis:

Four models are implemented separately by only enrolling treatment, whether CD4 cell count is less than 50 per microliter, whether albumin is less than 3 grams per deciliter and Body Mass Index in the models. This analysis uses coxph() function under R package ‘survival’. The models are listed as following (1 - 4):

- Multivariable Analysis:

One model is fitted by enrolling all covariates mentioned in the univariate analysis. The model is shown as following (5):

- Residuals Analysis:

Martingale, Deviance and Scaled Schoenfeld Residual are drawn in R. Smoothed lines are drawn by the smooth.spline() function in R on each graphs. Additionally, Schoenfeld Residuals are also shown by cox.zph() function under ‘survival’ package in R.

Results

Fig 1. Survival Curve and Risk Table of Kaplan Meier Approach

From the plot, we can see that the survival curve of people who received cART treatment in 8th week remains staying on the top of other two curves in the study period. Curves of ‘week 1’ and ‘week 4’ treatments have some crosses before the first 10 weeks. Then the curves of ‘week 1’ keeps staying at the bottom of the three curves.

Table 1. Result of Log - Rank Test

| Treatment | N | Observed | Expected | (O-E)^2/E | (O-E)^2/V |

|---|---|---|---|---|---|

| week1 | 163 | 27.00 | 20.90 | 1.78 | 2.68 |

| week4 | 160 | 20.00 | 21.56 | 0.11 | 0.17 |

| week8 | 155 | 17.00 | 21.54 | 0.96 | 1.47 |

| Chi-Square Value | 2.89 | Degree of Freedom | 2.00 | p-value | 0.24 |

Table 2. Estimated Values of Univariable Models (1 - 4) and the Multivariable Models (5)

The results of univariate analysis show that the hazard ratio of ‘week 8’ group compared by ‘week 1’ group and ‘week 4’ group are both not statistically significant by 1 (both parameters not statistically significant by 0). However, parameter of CD4, Albumin conditions and BMI are statistically significant than 0 at 0.05 critical criteria.

In the multivariable analysis, the conditions of the p-values for covariates are roughly the same with the univariate analysis. Both parameter of ‘week 1’ and ‘week 4’ treatments are not statistically significant by 0. The parameter of CD4, Albumin and conditions are statistically significant than 0 at 0.05 critical criteria. The only difference happened in the parameter ‘BMI’. It is significant at 0.1 critical criteria but not significant at 0.05 critical criteria.

Fig 2. Scaled Schenfeld Residual for Each Covariates in Multivariable Analysis

Fig 3. Matingale Residuals and Deviance Residuals

All of the residuals lying close to ‘0’. For Schenfeld residuals, we can the confidence intervals are always contain 0 for each covariates. This indicates that the multivariable model has a good fit so that the error term could stay in the distribution assumed by the model.

Discussion

There are several discrepancy between my results with the original paper. In the univariate analysis multivariable analysis, the regression coefficient of ‘CD4’ and ‘Albumin’ are not same with the paper. Coefficient of ‘Week 1’ are not same in the multivariable analysis. I think the reason is because of the data from the supplement of the paper. Although I analyzed with people who lost follow up before the treatment by considering the intention to treat approach, which was the same as the authors did in their paper, we can see that there are discrepancy between the number of people who died or quit before the treatment. In the paper, the number of three groups (‘week1’, ‘week4’ and ‘week8’) who received the treatment is 149, 147 and 135, while from the data they are 150, 146, and 137. This could lead the survival probability of three treatments different with the original paper at least when first event happens. Besides, In this assignment, I didn’t include ‘Hepatotoxicity requiring interruption of TB therapy’ as a covariate in the model, which could also lead different results.

Table 3. Comparation Between Hazard Ratio in Previous Model and in the Original Paper

| Covariates | Univariate Model | Multivariable Model | ||||||

|---|---|---|---|---|---|---|---|---|

| Hazard Ratio | HR From Paper | p-value | p-value from paper | Hazard Ratio | HR From Paper | p-value | p-value from paper | |

| Week 1 | 1.64 | 1.6 | 0.110 | 0.1 | 1.67 | 1.5 | 0.104 | 0.2 |

| Week 4 | 1.18 | 1.2 | 0.620 | 0.6 | 1.20 | 1.2 | 0.588 | 0.6 |

| CD4 cell < 50 | 1.97 | 3.3 | 0.007 | 0.001 | 1.69 | 2.7 | 0.041 | 0.001 |

| Albumin < 3 | 2.04 | 2.8 | 0.005 | 0.001 | 1.75 | 2.3 | 0.033 | 0.02 |

| BMI | 0.90 | 0.9 | 0.025 | 0.0 | 0.92 | 0.9 | 0.079 | 0.04 |

Although the parameters of different treatments are not significant in the model, we can still see a trend among three treatments: Hazard Ratio: week 1 : week 8 = 1.7; week 4 : week 8 = 1.2; week 8 : week 8 = 1. It is obvious that by controlling with other covariates, the later the patient receive the treatments, the higher of the survival probability could be. But it’s just not statistically significant.

In my opinion, there could be two solutions to figure out whether there is a trend between treatment time point and survival probability. The first one is to increase the sample size. I will use the parameter of ‘week 1’ in the multivariable analysis as an example. The standard error of this parameter is 0.314, which means only when the regression coefficient is larger than about 0.314 * 1.96 = 0.615 (negatively calculate is the same mechanism), it could be statistically significant at 0.05 critical criteria. That means the hazard ratio compare ‘week 8’ with ‘week 1’ should be larger than exp(0.615) = 1.85. However, as far as I am concerned, 1.5, or 1.2 are still meaningful results for the context. It’s just the study do not have enough power to prove it is statistically significant. In another words, if the standard error of ‘week 1’ could reduced to about 0.511 / 1.96 = 0.261, the result could be statistically significant at 0.05 critical criteria. We know that in the ‘week 1’ group, there are 163 participants, which means the variance of the regression coefficient in target population could be estimated as 0.314 * (163)^0.5 = 4.01. If we want the standard error (the variance of the sample mean of the regression coefficient from target population) to be less than 0.261, the sample size of the treatment group should be more than (4.01 / 0.261)^2 = 236. It is not a huge number, if the study could also increase the number of participants in ‘week 8’, the number could be lesser.

Another solution is to find another approach by assuming the outcome variable do have an order, with stronger assumption, the parameters could be farther to null hypothesis.

Conclusion:

From current results, we cannot deny the hypothesis that treatments in three different times could lead same survival curve, which means the hazard ratio of ‘week 1’ and ‘week 4’ compare to ‘week 8’ are same with 1. However, from the result, we could notice that other covariates like baseline CD4, Albumin and BMI status could be more related to different cumulative survival probability by time in the target population.

Reference:

[1] Amogne, W., Aderaye, G., Habtewold, A., Yimer, G., Makonnen, E., Worku, A., … & Lindquist, L. (2015). Efficacy and safety of antiretroviral therapy initiated one week after tuberculosis therapy in patients with CD4 counts < 200 cells/μL: TB-HAART Study, a randomized clinical trial. PLoS One, 10(5), e0122587.

Using Longitudinal Models To Analyze Steam Games

This is the final assignment of course BIOS 623 at CUNY GSPHHP in Fall 2019 Author: Ruoyan Han

Introduction

Video games, as an emerging merchandise, are usually selling their copies through online stores and physical storage disks. Before a game marked by a price and selling on markets, it should be developed by a developer group and be published by a publisher company. The game prices set by publisher could contain the cost of making the games and advertising fees, which is related to the quality of the games. Steam is the most famous video game digital distribution service developed by Valve Corporation. Most game publishers on the world publish soft copies of games on Steam game store. In the online store, developer, publisher, price, and customers’ reviews are recorded and updated on each game’s web-page.

Overall rating is a count number of all negative reviews (comments) and positive reviews (comments), which in other words is total review numbers (Fig 1). This value should be positively related to sells volume, because on Steam, only players who bought the game can give comments on the web page (one comment per player). The actual selling volume are hidden from the published data I acquired, so that I chose this as dependent variable, which could be considered as a proxy of overall selling volume. In cross-sectional analyses, it is hard to directly analyze the relationship between the game prices with overall ratings. Because the games are not independent with each other, famous publishers and developers could always have some fans love whatever game they made, while small companies may not sell their games well even the quality of the games they made aren’t that worse. Besides, the volume between different publisher are not the same, larger publisher could have more game published in the online stores, which may bias the relationship between price and the game ratings.

Fig 1. Different Reviews (Negative and Positive) of a Game Called “Foxhole” on Steam Game Store

In this analysis, I will use different longitudinal models (linear mixed effect models, fixed effect models and generalized estimating equation) to analyze the relationship between game prices and overall ratings in steam online stores among different game publishers. From the results of this analysis, game publishers could see whether the game prices settings is positively or negatively related with overall ratings. And so that they could have a deeper understanding of requirement from player on the view of games prices when they want to publish new games in future.

Method

Data Process

Data Source

Data for this analysis is created by Nik Davis and published on kaggle website [1]. The data collected all available games in U.S. steam online game store in May, 2019. The data is gathered through SteamSpy, a website uses an application programming interface (API) to the Steam software distribution service owned by Valve Corporation to estimate the number of sales of software titles offered on the service created by Sergey Galyonkin [2].

There are several fields in the dataset I acquired and I will use in this analysis: Publisher ID; Game ID; English (whether the game support English Language), Release Date; Overall Ratings and Price of each game.

Publisher enrolling

To use longitudinal models, multiple published records are needed for each publisher. Besides, small publisher companies who only published two or three games may not show a mature pattern between game price and overall ratings. Therefore, by creating a histogram and looking the distribution of counts of published game, I decided to use publishers who published more than eight games in the analysis.(Fig 2)

I also excluded free game records in the dataset, although, I reviewed the distribution of published game price (Fig 3) and it dose not show obvious ceiling effect or zero-inflated distribution. It is because that mostly, the commercial mode of free games are not same with the paid games. They usually sell extra components in the game which did not mention in the data. And so that it may bias the relationship between game prices and overall ratings. Please notice that after removed free games, there could be some publishers have game counts lower than eight.

I also added 0.1 to overall ratings for all games in case that some games’ overall rating is 0, which may not able to do the log transformation. (I would chose to use the log transformation because of the results of descriptive statistics (Fig 4).)

Analyzing Process

Descriptive Statistics

After data cleaning, basic descriptive plots (including game counts distribution, scatter plots of game price, and overall volume) and values (including correlation value, game counts for each publisher) are created and calculated to determine what kind of formats of independent variable and dependent variable to use in the models and cleaning processes.

Cross-sectional Model

To compare the difference between longitudinal models and cross-sectional model, a linear regression is fitted as a comparison. The model is written as following:

$log(\textrm{Overall Ratings}_i)=\beta_0+\beta_1*\textrm{Price}_i+\xi_i$,

The following models are longitudinal models I fitted for the data:

Longitudinal Models (LME)

$log(\textrm{Overall Ratings}_{ij}) = \beta_0 + b_i + \beta_1*(\textrm{Price}_{ij}) + \xi_{ij}$,

$b_i \in N(0, \delta)$, $i\in\textrm{Publishers}$, (LME-1)

Linear Mixed Effect Model - Random Intercept and Slope

$log(\textrm{Overall Ratings}_{ij}) = \beta_0 + b_{i1} + \beta_1*(b_{i2} + \textrm{Price}_{ij}) + \xi_{ij}$,

$b_{i1} \in N(0, \delta_1)$, $b_{i2} \in N(0, \delta_2)$, $i\in\textrm{Publishers}$, $j \in {\textrm{Games of Each Publisher}}$, (LME-2)

Non linear Relationship

$log(\textrm{Overall Ratings}_{ij}) = \beta_0 + b_i + \beta_1(\textrm{Price}_{ij}^2) + \beta_2(\textrm{Price}_{ij}) + \beta_3*(\textrm{Price}_{ij}^{0.5})+ \xi_{ij}$,

$b_i \in N(0, \delta)$, $i\in\textrm{Publishers}$, $j \in {\textrm{Games of Each Publisher}}$, (LME-3)

Effect measurement modifier and confounding effect of whether the game have English version

$log(\textrm{Overall Ratings}_{ij}) = \beta_0 + b_i + \beta_1 (\textrm{Price}_{ij}) + \beta_2 (\textrm{English}_{ij}) + \beta_3 (\textrm{Price}_{ij} \textrm{English}_{ij}) + \xi_{ij}$,

$b_i \in N(0, \delta)$, $i\in\textrm{Publishers}$, $j \in {\textrm{Games of Each Publisher}}$, (LME-4)

Confouder of released date

$log(\textrm{Overall Ratings}_{ij}) = \beta_0 + b_i + \beta_1 (\textrm{Price}_{ij}) + \beta_2 (\textrm{Released Date}_{ij}) + \xi_{ij}$,

$b_i \in N(0, \delta)$, $i\in\textrm{Publishers}$, $j \in {\textrm{Games of Each Publisher}}$, (LME-5)

Estimating line

The estimated value of LME models are calculated and combined with the scatter plots.

Fixed Effect Model (FEM)

Fixed effect models in this analysis are used to test whether the assumption of LME models (random intercept and random slope) are applicable. Rather than focusing the estimated parameter of ‘price’ in the FEM models, I would pay more attention of the distributions of fixed terms in the models. Distribution of fixed intercepts and slopes are plotted to see whether they are normally distributed. If they are normally distributed, we could use a stronger assumption and use LME the models to fit the data.

Intercept:

$log(\textrm{Overall Ratings}_{ij}) = \alpha_i + \beta_1*(\textrm{Price}_{ij}) + \xi_i$,

$i\in\textrm{Publishers}$, $j \in {\textrm{Games of Each Publisher}}$, (FEM-1)

Slope:

$log(\textrm{Overall Ratings}_{ij}) = \beta_0 + \beta_1 (\textrm{Price}_{ij}) + \beta_2 (\alpha_i * \textrm{Price}_{ij}) + \xi_i$,

$i\in\textrm{Publishers}$, $j \in {\textrm{Games of Each Publisher}}$, (FEM-2)

For the “fixed slopes”, I mean the actually slope of price fitted by this fixed effect model for each publisher.

$\textrm{Fixed Slope} = \beta_1+ \beta_2 * \alpha_i$

I wanted to use the offset() function in the regression of avoid the existence of β2. However, the program did not run well on this. So I still kept the β2. Fortunately, in this case, the existence of β2 does not affect the calculation of ‘fixed slopes’.

Generalized Estimating Equation (GEE)

In GEE model, I will use the original overall ratings data because GEE model could handle discreet data. Since the GEE model need same number of records for each publisher. To have a better understanding of correlation matrix, again, I chose the number of game counts in the dataset by the game count distribution (Fig 13). Finally, a subset of GEE model is created by choosing publishers published equal or more than 5 games. And 5 games are randomly chosen into the subset. There are 323 publishers and 1615 games are in the subset.

Before starting to fit the GEE model. Correlation Matrix of 5 games among publishers are calculated and plotted to determine what correlation matrix to use in the GEE model. Unfortunately, due to technical reason, although I tested the correlation matrix and checked the mean and standard deviation of overall ratings are same or not, I still chose to use Poisson distribution and “exchange” correlation matrix.

$log(\lambda_{ij})= \beta_0 + \beta_1*(\textrm{Price}_{ij})$,

$\textrm{Overall ratings}_{ij} \in Poisson(\lambda_{ij})$,

$i\in\textrm{Publishers}$, $j \in {\textrm{Games of Each Publisher}}$

Tools

Tools I used in this analysis including R software 3.6.1, packages: ggplot2, lattice, gridExtra, nlme, lme4, geepack, stargazer[3], and ggpubr. Microsoft Excel are also used in some calculations.

Results

Data Process

In the raw data, game counts for each publisher are generated and plotted.

In order to find a proper criteria to remove publishers only publisher one game or published too less game to bias the pattern of prices and overall ratings. From the plot, we can notice that there is a peak around log(counts) = 2, so that I decided to enroll publishers who have published more than exp(2) ≈ 8.

Note: I added 0.1 in the free game prices to generate this plot, otherwise the log price for free games are negative infinity.

From the plot, we can see the distribution of game price is that most game log prices are between 1 and 3. And also there is another peak of game frequency for free games and game prices less than 1 dollar.

The publisher include criteria are created based on the results above. In raw data, their are 14137 game publisher and 27075 published games. Among them, 324 publishers and their 7299 games are selected to be analysis.

In the subset for analysis, distribution of independent variable and dependent variable are plotted as following:

From the plot, we can see that price and mean price do not have an obvious distribution. The original overall ratings have some extremely high value cases, and skewed to the right. The log of overall rating is kind of a unimodal distribution. Therefore, in the longitudinal models, except GEE models (for discrete outcome), I would use the log of overall ratings as the dependent variable.

From this plot, we can see that there is a weak correlation between log of overall ratings and price. Especially, over 20 dollars, the smoothed line is almost straight. The correlation for original overall ratings is not obvious.

Analyze Process

As a comparison, a cross-sectional model is fitted.

Table 1. Result of cross-sectional model

| Parameter | Estimation | Standard Error | P-value |

|---|---|---|---|

| Intercept | 3.58 | 0.031 | <0.001 |

| Price | 0.11 | 0.002 | <0.001 |

| R-square | 0.1834 |

From the results, we can see that the price is positively related to log of overall ratings (p- value < 0.05). However, the R-square is not large, which means the residuals are relatively huge in this model. Other confounders may affect the association.

Table 2. Result of LME Models

| Parameter (Standard Error) | LME-1 | LME-2 | LME-3 | LME-4 | LME-5 |

|---|---|---|---|---|---|

| Price | 0.064*** | 0.058*** | 0.137*** | 0.006 | 0.091*** |

| (Price) | 0.003 | 0.007 | 0.028 | 0.260 | 0.003 |

| Price^0.5 | -0.279** | ||||

| (Price^0.5) | 0.134 | ||||

| Price^2 | -0.001*** | ||||

| (Price^2) | 0.0003 | ||||

| English | 0.351 | ||||

| (English) | 0.250 | ||||

| Price*English | 0.056*** | ||||

| (Price*English) | 0.016 | ||||

| Number of Date to “2019-11-31” | 0.001*** | ||||

| (Number of Date to “2019-11-31”) | 0.00002 | ||||

| Intercept | 3.906*** | 3.932*** | 4.146*** | 3.569*** | 2.720*** |

| (Intercept) | 0.084 | 0.087 | 0.174 | 0.026 | 0.078 |

| —- | —- | —- | —- | —- | —- |

| Random Intercept (Standard Deviation) | 1.43 | 1.43 | 1.43 | 1.44 | 1.18 |

| Random Slope (Standard Deviation) | 0.07 | ||||

| —- | —- | —- | —- | —- | —- |

| Observations | 7299 | 7299 | 7299 | 7299 | 7299 |

| Log Likelihood | -12,942.01 | -12,863.12 | -12,945.80 | -12,927.43 | -12,383.77 |

| Akaike Inf. Crit. | 25,892.02 | 25,738.23 | 25,903.59 | 25,866.86 | 24,777.55 |

| Bayesian Inf. Crit. | 25,919.60 | 25,779.60 | 25,944.96 | 25,908.23 | 24,812.02 |

Note: (*p <0.1); (**p <0.05); (***p <0.01)

Note: the red lines mean estimated average intercept and slopes. The blues lines are 95% interval of random intercepts and slopes.

Table 3. Fixed Effect Models

| FEM-1 | FEM-2 | |||||

|---|---|---|---|---|---|---|

| Covariates | Estimated Value | Standard Error | P-Value | Estimated Value | Standard Error | P-value |

| price | 0.060 | 0.003 | <0.001 | -0.157 | 0.0356 | 0.6587 |

| Intercept | 3.964 | 0.7923 | <0.001 | |||

| —- | —- | —- | —- | —- | —- | —- |

| Mean of Fixed Term | 3.93 | 0.26 | ||||

| Standard deviation of Fixed Term | 1.49 | 3.26 |

From the results, we can see that both of the fixed intercepts and slopes are quite normally distributed.

Before using GEE model, I generated a subset which contain all the 323 publishers who have more than 5 non-free games in the data and randomly picked 5 games for each publisher. Then randomly picked 100 publishers to fit the GEE model. The reason of why I chose 5 is because the following distribution of free game counts:

To choose what kind of correlated matrix to use in the GEE model, I plotted the correlation of games in five prices. When calculated in the correlation matrix, for each publisher, there could be duplicate prices for publishers. I randomly gave order for same prices.

Note: ‘or’ means ‘Overall Ratings’, and ‘p*’ means the rank of five prices

Table 4. Generalized Estimating Equation

| Estimated Value | Standard Error | P-value | |

|---|---|---|---|

| price | 0.0410 | 0.013 | 0.001 |

| Intercept | 6.732 | 0.386 | <0.001 |

Discussion

What are overall ratings indicating? What the models tell the game publishers?

Overall ratings may not be the most import measurement for game publishers, but actually it is the most important variable in the dataset I acquired. Firstly, as mentioned in introduction, the number of overall ratings is positively related to the selling volume. On the other hand, if there are lots of comments, publishers could get many reviews of the game, which could also help publishers and developers editing the game. Therefore, for each game, publishers do expect more comments / higher overall rating. The results of this analysis could help publishers expecting the overall ratings value (the number of total comments) by the setting of game price.

On average, for each publisher, the higher price one game published with, the more comments (higher overall ratings) is expected to acquire. However, this not means that a game with infinity price would have infinity overall rating. From LME models of testing non-linearity (LME-3), and with other covariates (LME4, LME-5) should that there are other factors effecting the relationship between game price and overall ratings. Besides, publishers cannot based on the results to set the game prices unreasonable high. The results can only tell us games with higher price could have higher overall ratings. Actually speaking, to receive higher overall ratings, the publishers should publish games that worth that higher prices.

Which model is the best to describe the relationship between game price and selling volume?

Since there will be many models discussed in this part, I will give my answer at first. In my opinion, the linear effect model with random intercept and random slope describes the relationship in a best way. Firstly, compare with the cross sectional model, longitudinal models could control for the difference between each publisher, sot hat it could reduce the confounding effect between game prices and overall ratings. The regression coefficient of price in cross-sectional model is higher than the longitudinal models, which means that the proportion of publishers who have more overall ratings is higher in the dataset.

Secondly, for generalized estimating equation, I put too many adjustments in that model. Because GEE model require each publisher have same amount of published games, I removed the publishers who have less than five games in the analyzing dataset and randomly chose five games for publishers who have more than five games. Besides, I should use the “unstructured” correlation model to fit the data. However, it would cost too much times to finish it. And at last, I still use “exchangeable” correlation matrix in the model. And the original count data isn’t a good dependent variable in this case.

Thirdly, in the fixed effect models, we can see that the fixed intercepts and slopes are quite normally distributed (Fig 9, Fig 10), which means we can make a stronger hypothesis that this intercepts and slopes are normally distributed.

Also from AIC and the Log likelihoods, the LME model with random intercept and random slope was still the best one among models without other covariates.

Whether we should controlling released time in the model?