This practicing project is going to crawl published infectious disease data from official website of health department of Shanghai (上海市卫生与计划生育委员会).

This project is finished in 2016. I don’t know whether their website has been updated or not. The name of the department has already changed from “卫计委” to “卫健委”.

However, the code should be valuable.

Basic Processes

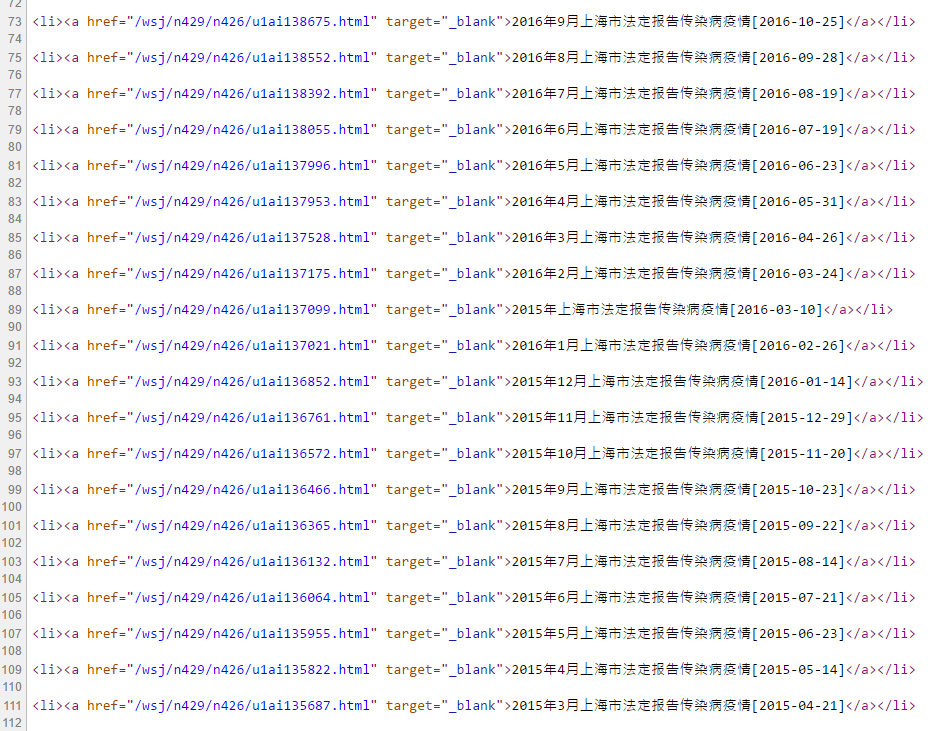

- On the official sites, there is a special page where saved all history reports.

From this picture, we can see there are for pages of the url. So the following processes are clear:

Save the html file of the four pages.

Extract urls from the four pages by finding the special pattern of saving the urls.

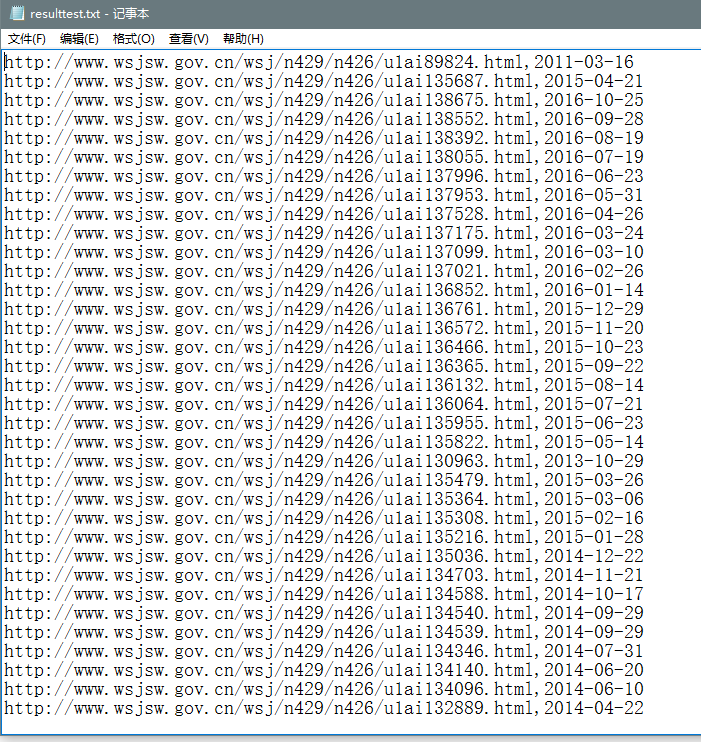

Finally, all urls are recongized and downloaded:

- The next step is download all html pages of the urls, where data is saving.

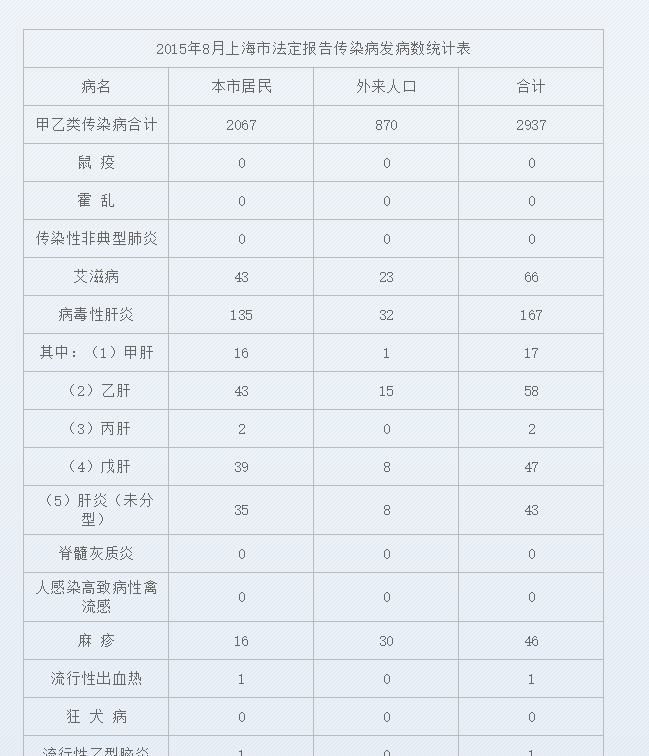

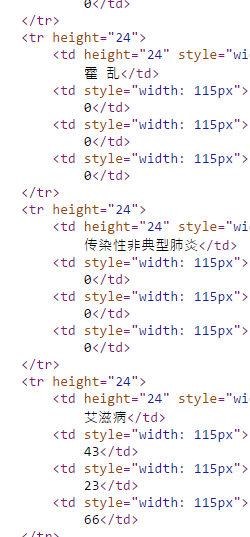

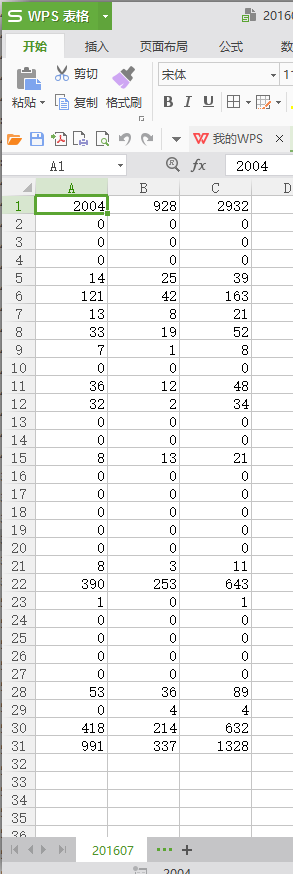

- The last step is recongnizing patterns of the data and save them.

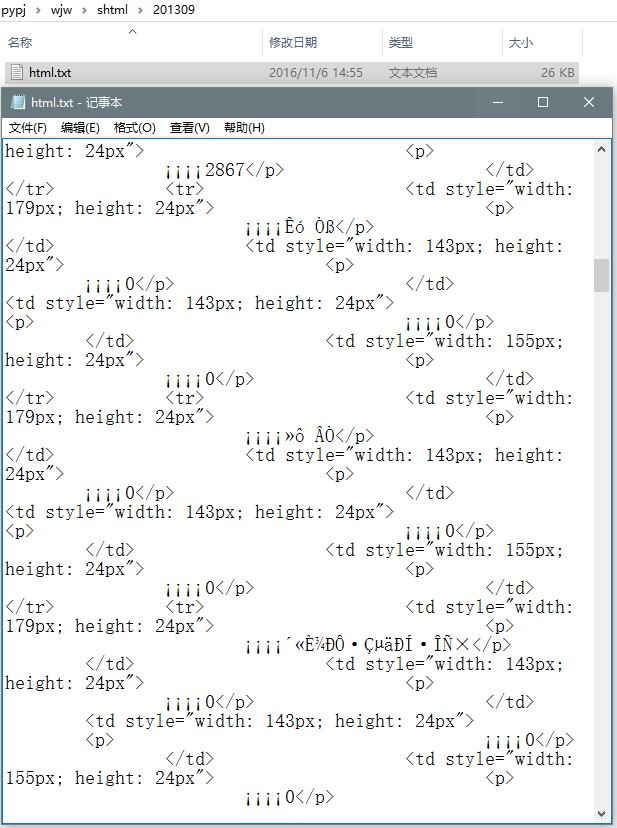

Maybe due to the changes of the staff, there are three patterns of the data. (I found this after trying for a huge times (^o^;).)

But, finally, I made it.

Core Codes

- to get access to the server and require html codes, we should firstly let our program pretent to be a browser. And thus we need to have a “head claim”:

1 | for i in range(0,2): |

- After we get the html file, we need to find the pattern and copy the “matched strings”

Plase notice that this method is called ‘Regular Expression’, ‘regexp’, or ‘正则表达式’. There is a more advanced plug-in in python called ‘Beautiful Soup’.

To understand what’s the meaning of ‘\\d’ or ‘\\w’, please find the tutorials of regexp by yourself.

1 | f = open("D://pypj//wjw//page1.txt") |

To see full codes of this program, please forward to my github reporsitory.

Please use the getpip.py to install pip

Since I am clearing up my code 4 years ago. The code for the 3rd pattern was lost. But the luckly I have enough codes to do the crawling processes.